There are a number of great reasons to consider a headless architecture for a web project. I sometimes hear site speed listed as a primary motivation. Is a headless website guaranteed to be faster? Let's explore this idea.

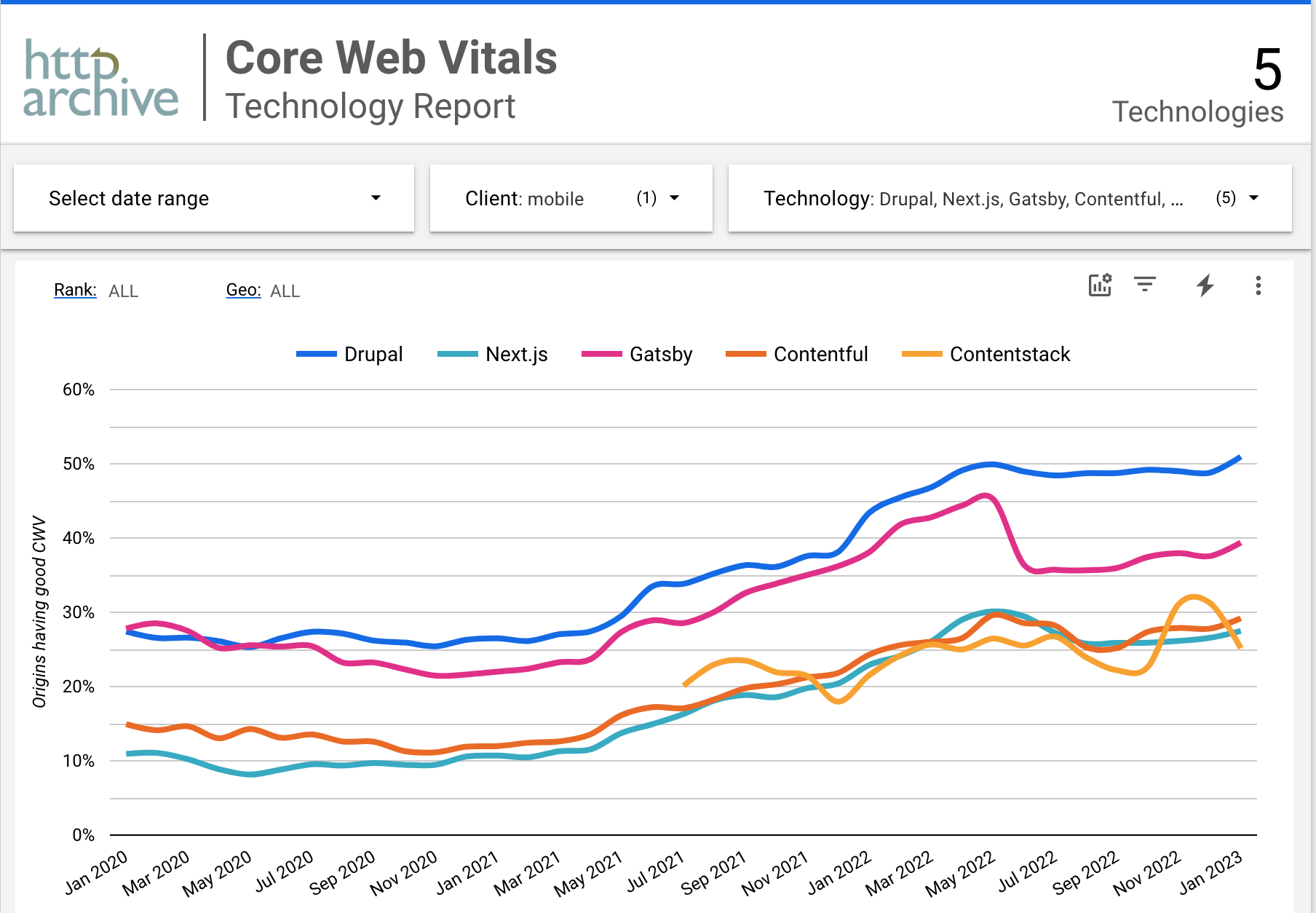

There are certainly many examples of fast headless websites online today. But there are, in fact, a lot of slow headless websites around as well. And according to Google and their Core Web Vitals reporting, a website using Drupal as its CMS is almost twice as likely to have a good score as one based on popular headless CMS options like Contentful or ContentStack.

Is the above an apples-to-apples comparison? Of course not. But we can say with certainty that any site using a headless-only CMS has a headless front end, and that many of those identified as using Drupal do not. Site speed is key to a good Core Web Vitals score, so based on Google's data a headless approach is no guarantee your site will be fast.

I’ve heard advocates of frameworks like Gatsby talk about how they're designed to be fast out of the box. The truth is, so is Drupal, which has a variety of caching layers, sophisticated image optimization capabilities, and more. A simple Drupal website runs fast without a lot of hardware behind it (my personal blog runs on a Raspberry Pi), but Drupal is also often used on sites with more inherent complexity. In fact, for years the Drupal project’s stated focus was on “ambitious digital experiences” before recently shifting to “ambitious site builders”.

A Dig Into The Numbers

In my career, performance has been a particular area of focus. I’ve done performance audits for a variety of organizations that are household names, from internationally-recognized cultural institutions to global consumer packaged goods companies. Typically what I see is that well-crafted Drupal sites may have a handful of pages where the HTML page actually renders slowly, but most often (and particularly on the home page, which should usually be fully cacheable), if the site is on a solid hosting platform, pages will have a first byte time of around 200-300ms. First byte time is the latency between when the request is delivered to the server and when the server starts to return a response. Oftentimes this is the truest measurement of the time it takes for the server to prepare the page for delivery. As such, we can think of this as the maximum potential performance benefit of moving a site to a headless architecture.
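To make the metric concrete, here is a minimal sketch of deriving first byte time from browser-style timing values. The `TimingSample` shape and the `firstByteTime` helper are illustrative names I've made up for this example. In a browser, the two values could be read from a `PerformanceNavigationTiming` entry, which exposes `requestStart` and `responseStart`. Measured from the client, the result also includes network transit, so treat it as an approximation of server time.

```typescript
// Hypothetical shape mirroring two fields of the browser's
// PerformanceNavigationTiming entry (values are millisecond timestamps).
interface TimingSample {
  requestStart: number; // when the browser started sending the request
  responseStart: number; // when the first byte of the response arrived
}

// First byte time: the gap between sending the request and
// receiving the first byte of the response.
function firstByteTime(sample: TimingSample): number {
  if (sample.responseStart < sample.requestStart) {
    throw new Error("responseStart must not precede requestStart");
  }
  return sample.responseStart - sample.requestStart;
}

// Example with made-up values in the range described above.
const sample: TimingSample = { requestStart: 120, responseStart: 370 };
console.log(`First byte time: ${firstByteTime(sample)}ms`); // 250ms
```

In a browser console, something like `performance.getEntriesByType("navigation")[0]` should return an entry with both fields for the current page.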

To put this into context, I’ve seen the full negotiation of a single SSL connection take 500ms or more, or roughly twice a typical first byte time on a Drupal website. For retargeting on popular social platforms, an SSL connection can take even longer, though often those can be non-blocking for the overall page load.

As a point of comparison, consider how many sites load their fonts: directly from a popular source like Google. Serving those same fonts from locally hosted copies (again assuming that your hosting platform is solid, often including its own CDN) delivers the same files in approximately the same transfer time (often 50ms or less per file, if optimized) without the additional SSL connection. In other words, for many Drupal sites switching to local copies of their web fonts could have twice the performance benefit of moving the entire site to headless, at a small fraction of the effort.
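The arithmetic behind that comparison can be sketched with the rough figures quoted above. The constants are this article's ballpark estimates rather than measurements, and `estimateSavings` is a made-up helper for illustration. It assumes the best case for headless (server preparation time drops to zero) and that font transfer time is the same either way.

```typescript
// Rough figures quoted in the text; assumptions, not measurements.
const FIRST_BYTE_MS = 250; // midpoint of the 200-300ms range
const THIRD_PARTY_SSL_MS = 500; // full SSL negotiation with an external font host

interface SavingsEstimate {
  headlessUpperBoundMs: number; // best case: first byte time eliminated entirely
  localFontsMs: number; // the avoided third-party SSL negotiation
  ratio: number; // local fonts saving relative to the headless upper bound
}

function estimateSavings(firstByteMs: number, sslHandshakeMs: number): SavingsEstimate {
  if (firstByteMs <= 0) {
    throw new Error("firstByteMs must be positive");
  }
  return {
    headlessUpperBoundMs: firstByteMs,
    localFontsMs: sslHandshakeMs,
    ratio: sslHandshakeMs / firstByteMs,
  };
}

const estimate = estimateSavings(FIRST_BYTE_MS, THIRD_PARTY_SSL_MS);
console.log(estimate.ratio); // 2
```

Plugging in your own measured values is the point of the exercise, since a slower origin or a faster font host would change the ratio.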

Where I more typically see performance issues is around assets: images, but also CSS and JavaScript. I’ve written before about strategies for optimizing Drupal for image handling. It’s true that many headless frameworks come with some sophisticated image optimization out of the box, but really the same is true of Drupal, which I believe is directly reflected in the Core Web Vitals results we looked at above. I think Drupal also has a ton of upside in this area, with the opportunity to tune your site’s image handling in a variety of sophisticated ways, including manual control over the focal point as images are re-cropped for different uses, the file format and compression strategies used, and more.

As for the CSS and JavaScript, it's true that historically Drupal may have contributed to site bloat by including sizeable libraries like jQuery and jQuery UI, but in recent years there has been significant effort to scale back what is loaded by default, as well as to move to more modern approaches, including vanilla JavaScript, where possible. I've seen some accounts that headless sites are also often guilty of bloat, particularly on the JavaScript side, where it may be tempting to pull in a sizeable library to solve even a simple problem. Moment.js comes to mind as an example, not because it's huge, but because many sites load that library when they only need a small fraction of its capabilities.
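As an illustration of the "small fraction of its capabilities" point, here is a minimal sketch of formatting a date with the built-in `Intl.DateTimeFormat` API instead of loading a date library. The `formatPublishedDate` helper and its defaults are assumptions made for this example, and it only covers simple display formatting, not everything a library like Moment.js can do.

```typescript
// Hypothetical helper: formats an ISO 8601 timestamp for display using only
// the built-in Intl API, with no third-party date library.
function formatPublishedDate(isoDate: string, locale: string = "en-US"): string {
  const date = new Date(isoDate);
  if (Number.isNaN(date.getTime())) {
    throw new Error(`Invalid date: ${isoDate}`);
  }
  return new Intl.DateTimeFormat(locale, {
    year: "numeric",
    month: "long",
    day: "numeric",
    timeZone: "UTC", // fixed zone so output does not depend on the visitor's clock
  }).format(date);
}

console.log(formatPublishedDate("2024-01-15T12:00:00Z"));
```

If a site needs heavier date arithmetic or parsing of loose formats, a library may still be justified; the point is to check before adding the dependency.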

Whether the architecture is headless or not, the biggest factors for performance really come down to the expertise and rigour applied to the implementation.

The True Value

This may be where we start to arrive at the biggest value of a headless architecture: the separation of concerns. In order to properly optimize the front end application, a team of React developers doesn't need to learn Drupalisms like how to attach a library to (or remove one from) a render array.

In a headless architecture, front end and back end teams only need to stay coordinated on what data needs to be available for the front end and in what format, and then they can be more independent in their day-to-day work, and more critically, in their deployments.
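One way teams could pin down "what data, in what format" is a shared type plus a runtime check on the front end. The sketch below is illustrative only: the `ArticlePayload` fields and the `isArticlePayload` guard are hypothetical and do not describe the shape of any particular Drupal API response.

```typescript
// Hypothetical contract agreed between the back end and front end teams.
interface ArticlePayload {
  id: string;
  title: string;
  body: string;
  publishedAt: string; // ISO 8601 timestamp
}

// Runtime guard: confirms an unknown value (e.g. parsed JSON) matches the contract.
function isArticlePayload(value: unknown): value is ArticlePayload {
  if (typeof value !== "object" || value === null) {
    return false;
  }
  const record = value as Record<string, unknown>;
  return (
    typeof record["id"] === "string" &&
    typeof record["title"] === "string" &&
    typeof record["body"] === "string" &&
    typeof record["publishedAt"] === "string"
  );
}

// Example: validating a parsed response before rendering it.
const parsed: unknown = JSON.parse(
  '{"id":"42","title":"Hello","body":"<p>Hi</p>","publishedAt":"2024-01-15T12:00:00Z"}'
);
console.log(isArticlePayload(parsed)); // true
```

With a check like this at the boundary, a back end change that breaks the agreed format shows up as an explicit failure rather than as a subtly broken page.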

For organizations without much Drupal expertise this can be very attractive, since React (or other front end framework) talent is currently easier to find than skilled Drupal experts.

Asynchronous vs. Multi-Threaded

One important difference to understand between headless and traditional approaches relates to the underlying server technologies and how they process requests. Most headless sites run on Node.js, which has non-blocking, asynchronous processing. That means a headless front end is ideal for sites that are displaying information that needs to update in real time. On the other hand, PHP, the most popular server language for dynamic websites, uses a multi-threaded architecture, which means that it can handle memory-intensive operations like processing large files without impacting other operations.

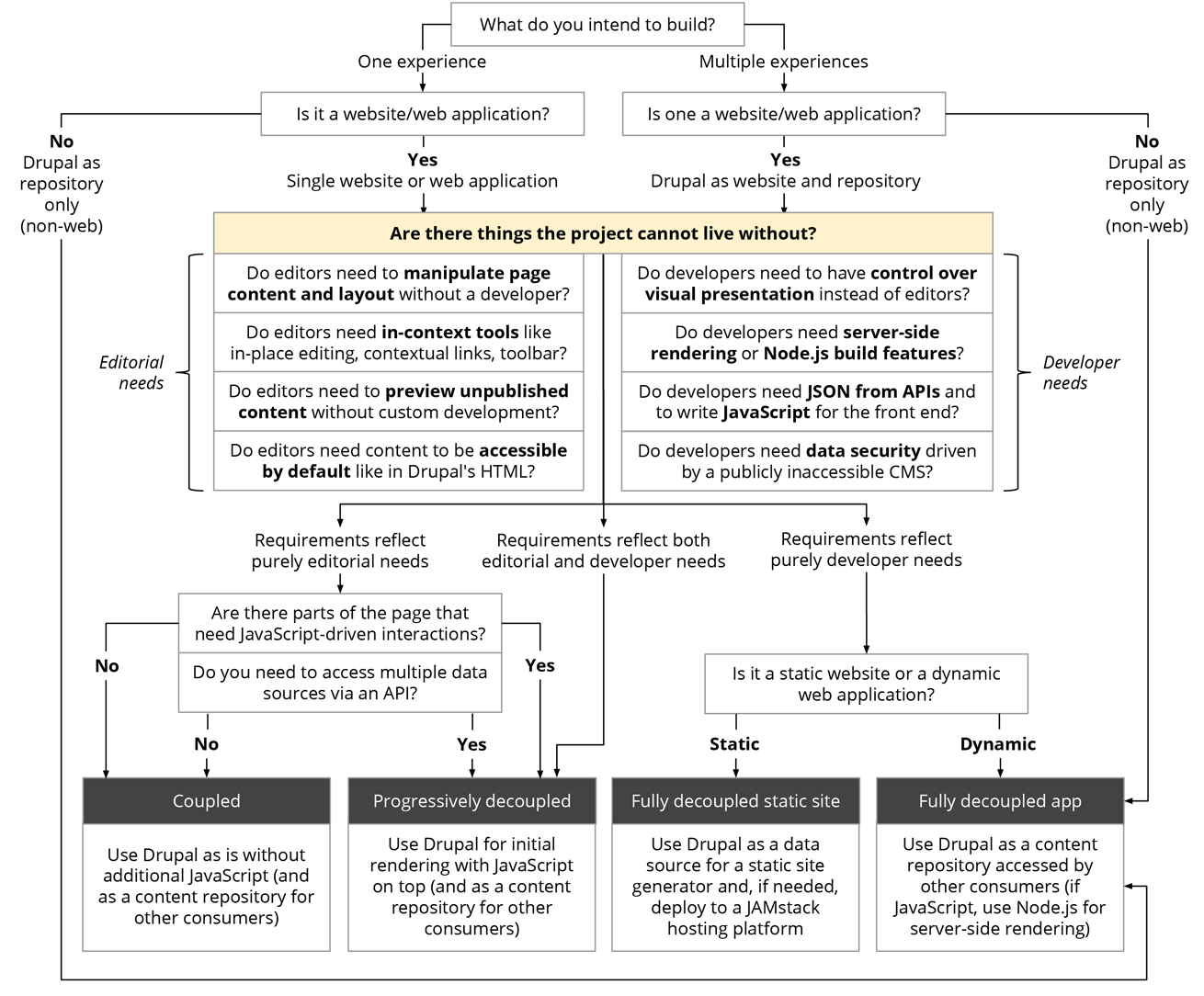

I would say that there are often additional trade-offs in terms of layout control, multilingual management, speed of site building, and more, but what I really wanted to capture in this article was some thoughts around how "headless" and "traditional" (or "monolithic", or as I like to call it, "unified") architectures compare on performance, and to look at the numbers that underlie many of the claims I've been hearing. A comprehensive discussion about the various considerations in choosing an architecture was explored in a blog post by Dries, which included this diagram:

Would moving your website to headless make it faster? There's a good chance it would. But if your site is currently running on an older version of Drupal, moving to a modern version is also likely to yield substantial benefits. Choose the approach that best suits your requirements and the ongoing resources that will be available.

Comments (4)

10-15 years ago we realized…

10-15 years ago we realized that the core of performance is on the front end, not the backend. So we started looking more at things like image optimization rather than SQL queries. Now I think it’s time for another transition. On any modern sizeable site the biggest impact on performance is Google Tag Manager and all the third party code that websites are loading. It’s very hard to maintain GTM over time without it getting out of control. So headless vs unified, or Drupal vs something else is moot until Google brings its focus on performance to GTM.

Agreed about 3rd party assets

I agree that on many sites the overuse of third party code is often particularly egregious for performance. Based on even recent sites I've looked at I would say (anecdotally) that on-site images and JavaScript are probably still a bigger factor, but 3rd party assets aren't far behind.

Varnish / Redis ?

Should I be using Varnish Cache and/or Redis on my traditional Drupal 10 sites? Do you think there is much benefit? Are they used by Acquia?

In a word, yes

Caching of basically any kind is going to help, especially if you also implement a solution like the Purge module to invalidate the cache when something changes. In answer to your question about Acquia, they offer Varnish and Memcached, and most Acquia customers can also leverage the free Platform CDN or the enterprise-grade Edge CDN.